Chinese tutorial documentation: http://webmagic.io/docs/zh/

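1. Introduction

WebMagic is a simple, flexible crawler framework. Its design is modeled on Scrapy, while its implementation builds on mature tools from the Java world such as HttpClient and Jsoup. The goal is a textbook implementation of a web crawler in Java. WebMagic consists of four components (Downloader, PageProcessor, Scheduler, Pipeline). The core code is very simple: it mainly wires these components together and runs the work across multiple threads.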

2. Core components

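- Downloader: fetches pages from the network
- PageProcessor: parses a page, extracts data, and discovers new links
- Scheduler: manages the queue of URLs to crawl and removes duplicates
- Pipeline: processes and persists the extracted results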
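How the four roles fit together can be pictured with a toy, single-threaded model. The sketch below uses only the JDK; the `MiniCrawler` class, its nested interfaces, and the in-memory "web" map are hypothetical names invented for illustration and are not WebMagic's API. WebMagic itself runs a comparable loop across multiple threads.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.Set;

// Toy model of the four roles; illustrative only, not WebMagic's API.
class MiniCrawler {
    interface Downloader { String download(String url); }
    // Adds extracted data to `results` and returns the links found on the page.
    interface Processor { List<String> process(String url, String body, List<String> results); }
    interface Pipeline { void handle(List<String> results); }

    /** Scheduler role: a FIFO queue that never hands out the same URL twice. */
    static class Scheduler {
        private final Queue<String> queue = new ArrayDeque<>();
        private final Set<String> seen = new HashSet<>();
        void push(String url) { if (seen.add(url)) queue.add(url); }
        String poll() { return queue.poll(); }
    }

    static void crawl(String startUrl, Downloader downloader, Processor processor, Pipeline pipeline) {
        Scheduler scheduler = new Scheduler();
        scheduler.push(startUrl);
        String url;
        while ((url = scheduler.poll()) != null) {
            String body = downloader.download(url);
            List<String> results = new ArrayList<>();
            for (String link : processor.process(url, body, results)) {
                scheduler.push(link); // duplicates are dropped by the scheduler
            }
            pipeline.handle(results);
        }
    }

    public static void main(String[] args) {
        // Fake "web": URL -> body, where the body lists linked URLs separated by spaces.
        Map<String, String> web = Map.of("a", "b c", "b", "a c", "c", "");
        List<String> visited = new ArrayList<>();
        crawl("a",
              url -> web.getOrDefault(url, ""),
              (url, body, results) -> {
                  results.add(url);
                  return body.isEmpty() ? List.<String>of() : List.of(body.split(" "));
              },
              visited::addAll);
        System.out.println(visited); // [a, b, c]
    }
}
```

Each page is downloaded once even though "a" and "c" are linked more than once, because de-duplication lives in the scheduler rather than in the page-processing code.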

3. Two crawler demos

Dependencies:

    <dependency>
        <groupId>us.codecraft</groupId>
        <artifactId>webmagic-core</artifactId>
        <version>0.7.3</version>
    </dependency>
    <dependency>
        <groupId>us.codecraft</groupId>
        <artifactId>webmagic-extension</artifactId>
        <version>0.7.3</version>
    </dependency>

3.1 Cnblogs crawler demo

Cnblogs: https://www.cnblogs.com/

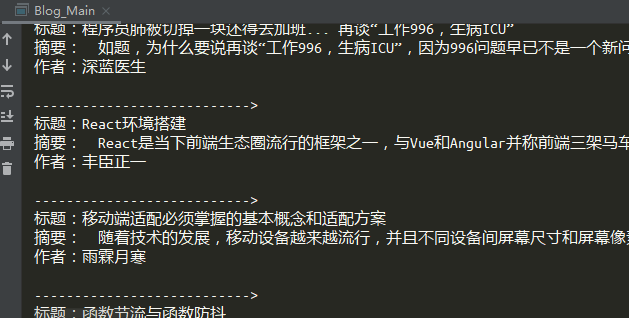

    import us.codecraft.webmagic.Page;
    import us.codecraft.webmagic.Site;
    import us.codecraft.webmagic.processor.PageProcessor;
    import us.codecraft.webmagic.selector.Selectable;

    import java.util.List;

    public class BlogPage implements PageProcessor {

        @Override
        public void process(Page page) {
            // Each post on the home page is a div.post_item inside div#post_list
            List<Selectable> list = page.getHtml().css("div#post_list div.post_item").nodes();
            for (Selectable s : list) {
                System.out.println("--------------------------->");
                System.out.println("Title: " + s.css("div.post_item_body h3 a", "text").get());
                System.out.println("Summary: " + s.css("div.post_item_body p.post_item_summary", "text").get());
                System.out.println("Author: " + s.css("div.post_item_body div.post_item_foot a", "text").get());
            }
        }

        @Override
        public Site getSite() {
            // Retry count, delay between requests (ms), and request timeout (ms)
            return Site.me().setRetryTimes(500).setSleepTime(200).setTimeOut(5000);
        }
    }

    import us.codecraft.webmagic.Spider;

    public class Blog_Main {
        public static void main(String[] args) {
            String url = "https://www.cnblogs.com/";
            new Spider(new BlogPage())
                    .addUrl(url)
                    .thread(3)  // crawl with 3 threads
                    .run();     // assumed terminal call; blocks until the crawl finishes
        }
    }

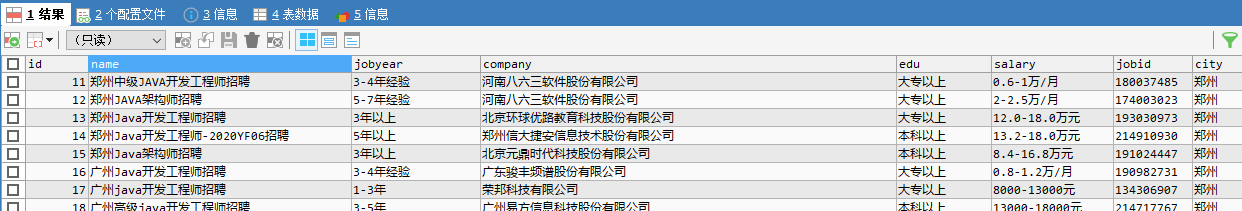

3.2 Jobui crawler demo

Jobui: https://www.jobui.com/

    public class JobPage implements PageProcessor {

        @Override
        public void process(Page page) {
            List<Selectable> list = page.getHtml().css("div.j-recommendJob div.c-job-list").nodes();
            List<Job> jobs = new ArrayList<>();
            for (Selectable s : list) {
                Job job = new Job();
                job.setName(s.css("div.job-content-box div.job-content div.job-segmetation a h3", "title").get());
                // Assumes the first span holds the required experience and the second the education level
                job.setJobyear(s.css("div.job-content-box div.job-content div.job-segmetation div.job-desc span", "text").all().get(0));
                job.setEdu(s.css("div.job-content-box div.job-content div.job-segmetation div.job-desc span", "text").all().get(1));
                job.setSalary(s.css("div.job-content-box div.job-content div.job-segmetation div.job-desc span.job-pay-text", "text").get());
                job.setCompany(s.css("div.job-content-box div.job-content div.job-segmetation a.job-company-name", "text").get());
                jobs.add(job);
            }
            // Hand the extracted jobs to the pipeline under the key "jobs"
            page.putField("jobs", jobs);
        }

        @Override
        public Site getSite() {
            return Site.me().setRetryTimes(500).addHeader("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.89 Safari/537.36").setSleepTime(200).setTimeOut(5000);
        }
    }

Create the database:

    create database db_job2001;

Persistence dependencies:

    <dependency>
        <groupId>com.baomidou</groupId>
        <artifactId>mybatis-plus-boot-starter</artifactId>
        <version>3.3.2</version>
    </dependency>
    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>druid</artifactId>
        <version>1.1.22</version>
    </dependency>

Data source configuration:

    spring:
      datasource:
        driver-class-name: com.mysql.cj.jdbc.Driver
        # Replace <database-name> with your schema, e.g. db_job2001
        url: jdbc:mysql://localhost:3306/<database-name>?serverTimezone=Asia/Shanghai&useUnicode=true&characterEncoding=utf8
        username: root
        password: 123456
        type: com.alibaba.druid.pool.DruidDataSource

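Entity class:

    import com.baomidou.mybatisplus.annotation.IdType;
    import com.baomidou.mybatisplus.annotation.TableId;
    import com.baomidou.mybatisplus.annotation.TableName;
    import lombok.Data;

    import java.util.Date;

    @Data
    @TableName("t_zyjjob")
    public class Job {
        @TableId(type = IdType.AUTO)
        private Integer id;
        private String name;
        private String jobid;
        private String city;
        private String jobyear;
        private String edu;
        private String salary;
        private String company;
        private Date stime;
        private Date ctime;
    }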

DAO, service interface, and service implementation:

    public interface JobDao extends BaseMapper<Job> { }

    public interface JobService extends IService<Job> { }

    @Service
    public class JobServiceImpl extends ServiceImpl<JobDao, Job> implements JobService { }

Controller:

    import com.baomidou.mybatisplus.core.conditions.query.QueryWrapper;
    import com.baomidou.mybatisplus.extension.api.R;
    import com.baomidou.mybatisplus.extension.plugins.pagination.Page;
    import com.demo.webmagic.entity.Job;
    import com.demo.webmagic.service.JobService;
    import com.demo.webmagic.spider.JobPage;
    import com.demo.webmagic.spider.JobPipeline;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.PathVariable;
    import org.springframework.web.bind.annotation.RestController;
    import us.codecraft.webmagic.Spider;

    import java.util.List;

    @RestController
    public class JobController {
        @Autowired
        private JobService service;
        @Autowired
        private JobPage jobPage;
        @Autowired
        private JobPipeline pipeline;

        // List all stored jobs
        @GetMapping("/api/job/all")
        public R<List<Job>> all() {
            QueryWrapper<Job> queryWrapper = new QueryWrapper<>();
            queryWrapper.orderByDesc("stime"); // sort direction assumed: newest first
            return R.ok(service.list(queryWrapper));
        }

        // Paged query: page number and page size come from the path
        @GetMapping("/api/job/page/{page}/{count}")
        public R<Page<Job>> page(@PathVariable int page, @PathVariable int count) {
            Page<Job> page1 = new Page<>(page, count);
            QueryWrapper<Job> queryWrapper = new QueryWrapper<>();
            queryWrapper.orderByDesc("stime"); // sort direction assumed: newest first
            return R.ok(service.page(page1, queryWrapper));
        }

        // Start a crawl for the given city; start() returns immediately and crawls in the background
        @GetMapping("/api/spider/startjob/{city}")
        public R<String> start(@PathVariable String city) {
            jobPage.setCity(city); // the processor needs the city to recognize the first result page
            new Spider(jobPage).addPipeline(pipeline).addUrl("https://www.jobui.com/jobs?jobKw=Java&cityKw=" + city).thread(3).start();
            return R.ok("OK");
        }
    }

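Date utility:

    import java.util.Calendar;
    import java.util.Date;

    public class DateUtil {

        // Converts a relative time such as "3天前" (3 days ago) or "5小时前" (5 hours ago) into a Date
        public static Date parseTimeV1(String msg) {
            Calendar calendar = Calendar.getInstance();
            if (msg != null && msg.length() > 0) {
                if (msg.indexOf('天') > -1) {
                    // Subtract the number of days that precedes '天'
                    calendar.add(Calendar.DAY_OF_MONTH, -Integer.parseInt(msg.substring(0, msg.indexOf('天'))));
                } else if (msg.indexOf('小') > -1) {
                    // Subtract the number of hours that precedes '小时'
                    calendar.add(Calendar.HOUR_OF_DAY, -Integer.parseInt(msg.substring(0, msg.indexOf('小'))));
                }
            }
            return calendar.getTime();
        }
    }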
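The relative-time conversion in `parseTimeV1` is easier to check when the current time is passed in rather than read from the system clock. The following is a minimal sketch of that idea using `java.time`; the class name `RelativeTime` and the input strings are assumptions made for illustration (the site's exact wording, for example "3天前" or "5小时前", is not confirmed by the source). The source is kept ASCII-only, so 天 is written as `\u5929` and 小 as `\u5c0f`.

```java
import java.time.LocalDateTime;

// Hypothetical helper, not part of the original project.
class RelativeTime {
    private static final char DAY = '\u5929';  // the character meaning "day"
    private static final char HOUR = '\u5c0f'; // first character of the word meaning "hour"

    /** Returns `now` shifted back by the leading number of days or hours found in `msg`. */
    static LocalDateTime parse(String msg, LocalDateTime now) {
        if (msg == null) {
            return now;
        }
        String s = msg.trim();
        int day = s.indexOf(DAY);
        int hour = s.indexOf(HOUR);
        try {
            if (day > 0) {
                return now.minusDays(Long.parseLong(s.substring(0, day).trim()));
            }
            if (hour > 0) {
                return now.minusHours(Long.parseLong(s.substring(0, hour).trim()));
            }
        } catch (NumberFormatException e) {
            // No leading number (for example a word meaning "today"): fall back to `now`.
        }
        return now;
    }

    public static void main(String[] args) {
        LocalDateTime now = LocalDateTime.of(2020, 8, 1, 12, 0);
        System.out.println(parse("3\u5929\u524d", now));       // 2020-07-29T12:00
        System.out.println(parse("5\u5c0f\u65f6\u524d", now)); // 2020-08-01T07:00
    }
}
```

Unlike `parseTimeV1`, this variant does not throw when the text before the marker character is not a number; whether that fallback is the right behavior depends on what the site actually emits.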

Page data extraction + Pipeline processing

    import com.demo.webmagic.entity.Job;
    import com.demo.webmagic.util.DateUtil;
    import org.springframework.stereotype.Component;
    import us.codecraft.webmagic.Page;
    import us.codecraft.webmagic.Site;
    import us.codecraft.webmagic.processor.PageProcessor;
    import us.codecraft.webmagic.selector.Selectable;

    import java.util.ArrayList;
    import java.util.Date;
    import java.util.List;

    @Component
    public class JobPage implements PageProcessor {

        private String city;

        public void setCity(String city) {
            this.city = city;
        }

        @Override
        public void process(Page page) {
            List<Selectable> list = page.getHtml().css("div.j-recommendJob div.c-job-list").nodes();
            List<Job> jobs = new ArrayList<>();
            for (Selectable s : list) {
                Job job = new Job();
                job.setName(s.css("div.job-content-box div.job-content div.job-segmetation a h3", "title").get());
                // Assumes the first span holds the required experience and the second the education level
                job.setJobyear(s.css("div.job-content-box div.job-content div.job-segmetation div.job-desc span", "text").all().get(0));
                job.setEdu(s.css("div.job-content-box div.job-content div.job-segmetation div.job-desc span", "text").all().get(1));
                job.setSalary(s.css("div.job-content-box div.job-content div.job-segmetation div.job-desc span.job-pay-text", "text").get());
                job.setCompany(s.css("div.job-content-box div.job-content div.job-segmetation a.job-company-name", "text").get());
                job.setJobid(s.css("div.job-content-box div.job-addition-box div.job-icon-box span", "data-positionid").get());
                // The listing shows a relative publish time; convert it to a Date
                job.setStime(DateUtil.parseTimeV1(s.css("div.job-content-box div.job-addition-box div.job-add-date", "text").get()));
                job.setCtime(new Date());
                jobs.add(job);
            }
            page.putField("jobs", jobs);

            // Only the first result page queues the remaining pages (2 to 50)
            if (page.getUrl().get().equals("https://www.jobui.com/jobs?jobKw=Java&cityKw=" + city)) {
                List<String> urls = new ArrayList<>();
                // Fixed: the page URLs are built from the city, not from getClass()
                String u = "https://www.jobui.com/jobs?jobKw=Java&cityKw=" + city + "&n=";
                for (int i = 2; i <= 50; i++) {
                    urls.add(u + i);
                }
                page.addTargetRequests(urls);
            }
        }

        @Override
        public Site getSite() {
            return Site.me().setRetryTimes(500).addHeader("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.89 Safari/537.36").setSleepTime(200).setTimeOut(5000);
        }
    }
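The pagination step in `process` builds one URL per result page by appending `&n=` and a page number to the search URL. A small standalone helper makes that logic testable without the crawler. This is only a sketch: `JobUrls`, `firstPage`, and `followUpPages` are hypothetical names, and percent-encoding the city is an assumption about what the site accepts (it matters for non-ASCII city names). It needs Java 10 or later for `URLEncoder.encode(String, Charset)`.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of the original project.
class JobUrls {
    static final String BASE = "https://www.jobui.com/jobs?jobKw=Java&cityKw=";

    /** URL of the first result page, with the city percent-encoded as UTF-8. */
    static String firstPage(String city) {
        return BASE + URLEncoder.encode(city, StandardCharsets.UTF_8);
    }

    /** URLs of result pages 2..lastPage (inclusive); empty when lastPage < 2. */
    static List<String> followUpPages(String city, int lastPage) {
        List<String> urls = new ArrayList<>();
        String prefix = firstPage(city) + "&n=";
        for (int n = 2; n <= lastPage; n++) {
            urls.add(prefix + n);
        }
        return urls;
    }

    public static void main(String[] args) {
        // Prints the URLs for pages 2 and 3 of the "beijing" search.
        System.out.println(followUpPages("beijing", 3));
    }
}
```

If the city is encoded this way, the start URL passed to `addUrl` and the comparison against `page.getUrl()` would have to use the same encoded form, otherwise the first-page check never matches.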

    import com.demo.webmagic.entity.Job;
    import com.demo.webmagic.service.JobService;
    import lombok.extern.slf4j.Slf4j;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.stereotype.Component;
    import us.codecraft.webmagic.ResultItems;
    import us.codecraft.webmagic.Task;
    import us.codecraft.webmagic.pipeline.Pipeline;

    import java.util.List;

    @Component
    @Slf4j
    public class JobPipeline implements Pipeline {
        @Autowired
        private JobService service;

        @Override
        public void process(ResultItems resultItems, Task task) {
            // Read the list that JobPage stored under the key "jobs"
            List<Job> jobList = resultItems.get("jobs");
            if (service.saveBatch(jobList)) {
                log.info("Jobs saved successfully");
            } else {
                log.error("Failed to save crawled data");
            }
        }
    }

Application entry point:

    import com.baomidou.mybatisplus.extension.plugins.PaginationInterceptor;
    import com.baomidou.mybatisplus.extension.plugins.pagination.optimize.JsqlParserCountOptimize;
    import org.mybatis.spring.annotation.MapperScan;
    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.context.annotation.Bean;
    import org.springframework.transaction.annotation.EnableTransactionManagement;

    @SpringBootApplication
    @MapperScan(basePackages = "com.demo.webmagic.dao")
    @EnableTransactionManagement
    public class WebmagicApplication {

        public static void main(String[] args) {
            SpringApplication.run(WebmagicApplication.class, args);
        }

        // MyBatis-Plus pagination plugin, required for service.page(...) to apply limits
        @Bean
        public PaginationInterceptor paginationInterceptor() {
            PaginationInterceptor paginationInterceptor = new PaginationInterceptor();
            paginationInterceptor.setCountSqlParser(new JsqlParserCountOptimize(true));
            return paginationInterceptor;
        }
    }